DeepSeek Releases Janus-Pro AI Image Generator

DeepSeek says Janus-Pro-7B outperforms OpenAI's DALL-E 3 and Stable Diffusion on several benchmarks. But is it really that good?

DeepSeek’s R1 model has been making headlines globally for the past few days. It’s an open-source and affordable alternative to OpenAI’s o1 model. Yet even before the buzz around R1 has settled, the Chinese startup has unveiled another open-source model, Janus-Pro, which can both understand and generate images.

DeepSeek says Janus-Pro-7B outperforms OpenAI’s DALL-E 3 and Stable Diffusion on several benchmarks. But is it really that good? Does it live up to the claims, or is it just another model riding the AI hype?

Let’s find out.

What is Janus-Pro?

In simple terms, Janus-Pro is a powerful AI model that can understand images and text and can also create images from text descriptions.

Janus-Pro is an enhanced version of the Janus model, designed for unified multimodal understanding and generation. Compared with Janus, it uses an optimized training strategy, expanded training data, and a larger model size. It also produces more stable outputs for short prompts, with improved visual quality, richer details, and the ability to render simple text.

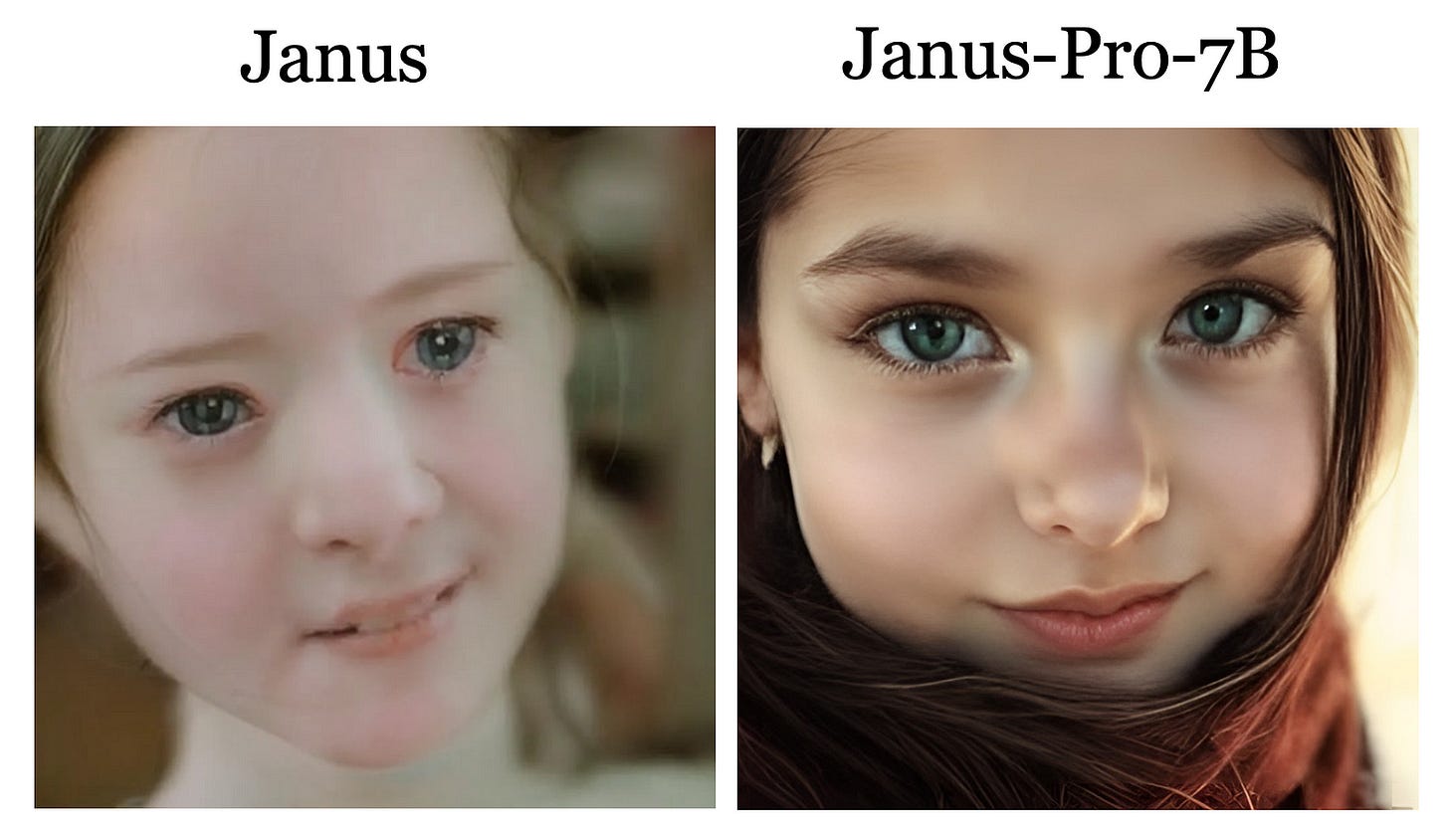

Here are some examples:

Prompt: The face of a beautiful girl

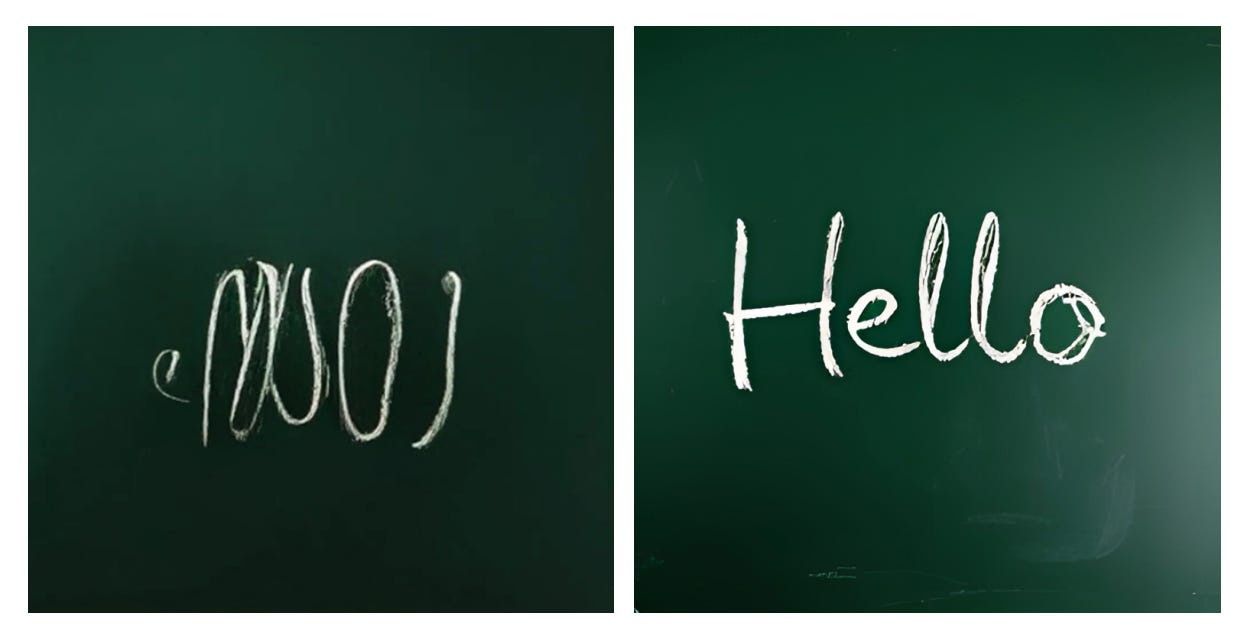

The newer model is also better at rendering text.

Prompt: A clear image of a blackboard with a clean, dark green surface and the word ‘Hello’ written precisely and legibly in the center with bold, white chalk letters.

The Janus-Pro series comes in two sizes, 1 billion and 7 billion parameters, demonstrating the scalability of its visual encoding and decoding approach. Both models generate images at a resolution of 384 × 384.
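To get a feel for what a 384 × 384 output means for a model that generates images token by token, here is a back-of-the-envelope calculation. It assumes the image tokenizer shrinks each side of the image by a factor of 16, a common choice for VQ image tokenizers; treat that factor (and the helper name `image_token_count`) as illustrative assumptions, not confirmed Janus-Pro details.

```python
def image_token_count(height: int, width: int, downsample: int) -> int:
    """Discrete tokens for an image after a tokenizer that shrinks each side by `downsample`."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsample factor")
    return (height // downsample) * (width // downsample)


# Assumption: a downsample factor of 16 (hypothetical for Janus-Pro, common for VQ tokenizers).
ASSUMED_DOWNSAMPLE = 16

print(image_token_count(384, 384, ASSUMED_DOWNSAMPLE))  # 576 (a 24 x 24 grid)

# Doubling the side length quadruples the number of tokens to generate.
print(image_token_count(768, 768, ASSUMED_DOWNSAMPLE))  # 2304
```

Because the token count grows with the square of the side length, each step up in resolution makes token-by-token generation considerably more expensive.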

As for licensing, the model is released under a permissive license that allows both academic and commercial use.

Technical Details of Janus-Pro

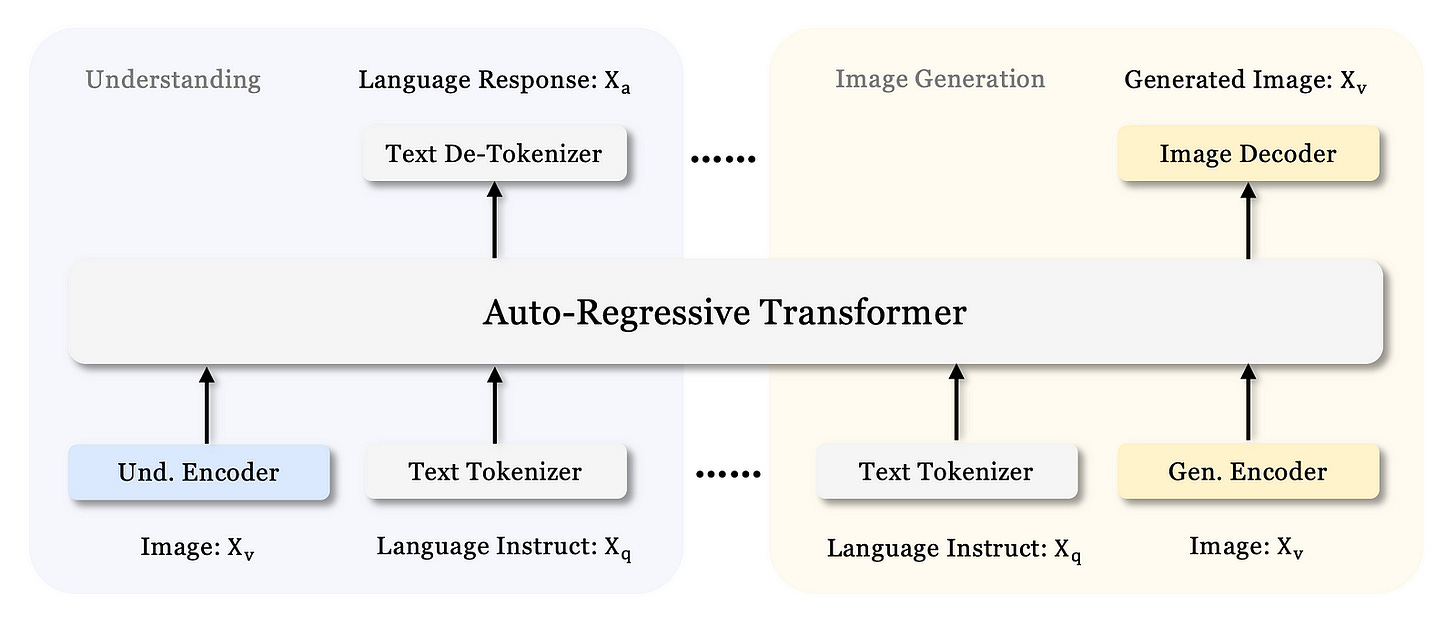

Janus-Pro uses separate visual encoding methods for multimodal understanding and visual generation tasks. This design aims to mitigate conflicts between these two tasks and improve overall performance.

For multimodal understanding, Janus-Pro uses the SigLIP encoder to extract high-dimensional semantic features from images, which are then mapped to the LLM’s input space via an understanding adaptor.
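The understanding path can be sketched as follows. This is a toy illustration, not DeepSeek's code: it assumes the understanding adaptor is a small two-layer MLP, uses random weights in place of trained ones, and uses tiny made-up dimensions. The names `UnderstandingAdaptor`, `linear`, and `gelu` are hypothetical. The point is only that continuous per-patch features from the vision encoder are projected to the LLM's embedding width so they can sit in the same input sequence as text.

```python
import math
import random


def gelu(x: float) -> float:
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x**3)))


def linear(vec, weights, bias):
    """Dense layer: one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def random_layer(in_dim, out_dim, rng):
    """Random weights standing in for trained parameters."""
    weights = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


class UnderstandingAdaptor:
    """Hypothetical two-layer MLP: encoder feature width -> LLM input width."""

    def __init__(self, feature_dim: int, llm_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.fc1 = random_layer(feature_dim, llm_dim, rng)
        self.fc2 = random_layer(llm_dim, llm_dim, rng)

    def __call__(self, patch_features):
        # One output vector per image patch, so the patch sequence length is preserved.
        projected = []
        for feat in patch_features:
            hidden = [gelu(h) for h in linear(feat, *self.fc1)]
            projected.append(linear(hidden, *self.fc2))
        return projected


# Toy sizes (hypothetical): 4 patches with 8-dim encoder features, LLM width 16.
rng = random.Random(42)
patch_features = [[rng.random() for _ in range(8)] for _ in range(4)]
image_embeds = UnderstandingAdaptor(feature_dim=8, llm_dim=16)(patch_features)

# Image embeddings join (placeholder) text embeddings in one input sequence.
text_embeds = [[0.0] * 16 for _ in range(3)]
sequence = image_embeds + text_embeds
print(len(sequence), len(sequence[0]))  # 7 16
```

The ordering of image and text embeddings here is arbitrary; what matters is that, after the adaptor, the LLM sees both as vectors of the same width.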

For visual generation, the model uses a VQ tokenizer to convert images into discrete IDs, which are then mapped to the LLM’s input space via a generation adaptor.
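The generation path works with discrete IDs instead of continuous features. The sketch below shows the core idea of vector quantization: each patch vector is replaced by the index of its nearest codebook entry, and an embedding table then maps those IDs to the LLM's input width. It is a simplification under stated assumptions: the tokenizer's encoder is omitted (the `patches` list stands in for its output), the codebook is a tiny made-up 2-D one, and `GenerationAdaptor` is a hypothetical lookup table rather than Janus-Pro's actual adaptor.

```python
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(patch_vectors, codebook):
    """Map each patch vector to the index of its nearest codebook entry (its discrete ID)."""
    return [
        min(range(len(codebook)), key=lambda i: squared_distance(vec, codebook[i]))
        for vec in patch_vectors
    ]


class GenerationAdaptor:
    """Hypothetical embedding table: one LLM-width vector per discrete image ID."""

    def __init__(self, codebook_size: int, llm_dim: int, seed: int = 0):
        rng = random.Random(seed)
        # Random values stand in for trained parameters.
        self.table = [[rng.gauss(0.0, 0.02) for _ in range(llm_dim)] for _ in range(codebook_size)]

    def __call__(self, ids):
        return [self.table[i] for i in ids]


# Toy 2-D codebook with 4 entries (real tokenizers use far larger codebooks).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
patches = [[0.1, 0.2], [0.9, 0.1], [0.8, 0.9], [0.2, 0.7]]

ids = quantize(patches, codebook)
print(ids)  # [0, 1, 3, 2]

embeds = GenerationAdaptor(codebook_size=len(codebook), llm_dim=8)(ids)
print(len(embeds), len(embeds[0]))  # 4 8
```

In VQ-based autoregressive generators generally, the flow runs in reverse at generation time: the LLM predicts image IDs one at a time, and the tokenizer's decoder turns the completed ID grid back into pixels.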

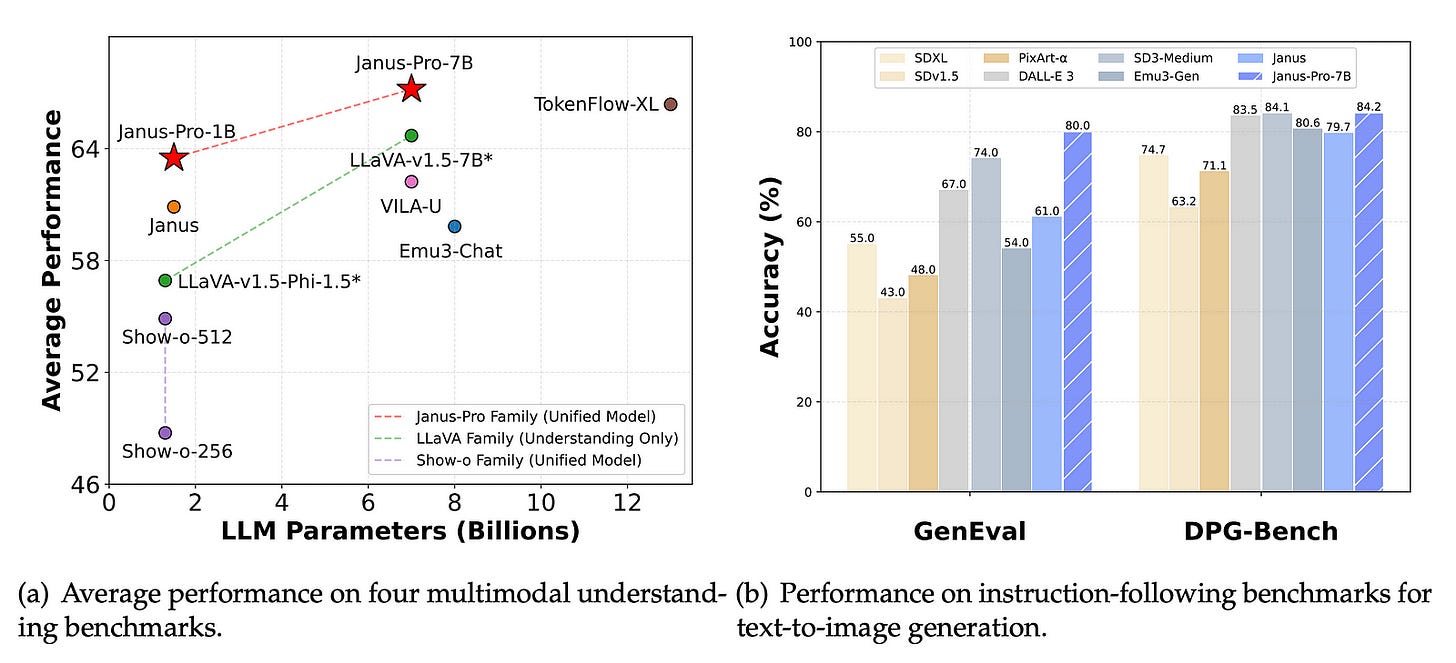

In text-to-image instruction following, Janus-Pro-7B scores 0.80 on the GenEval benchmark, outperforming models such as OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion 3 Medium.

Janus-Pro-7B also scores 84.19 on DPG-Bench, ahead of all the other methods DeepSeek compared it against, which suggests it can follow dense, detailed prompts for text-to-image generation.
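The 0.80 GenEval figure is a single aggregate. GenEval checks generated images against prompts in six categories (single object, two objects, counting, colors, position, and color attribution), and the overall score is the average of the per-category accuracies. The sketch below shows that averaging; the accuracies in `example` are made up for illustration and are not Janus-Pro's reported results, and `geneval_overall` is a hypothetical helper.

```python
GENEVAL_TASKS = (
    "single_object",
    "two_object",
    "counting",
    "colors",
    "position",
    "color_attribution",
)


def geneval_overall(per_task: dict) -> float:
    """Overall score = unweighted mean of the six per-category accuracies."""
    missing = set(GENEVAL_TASKS) - set(per_task)
    if missing:
        raise ValueError(f"missing tasks: {sorted(missing)}")
    return sum(per_task[t] for t in GENEVAL_TASKS) / len(GENEVAL_TASKS)


# Made-up accuracies for illustration only; NOT Janus-Pro's reported numbers.
example = {
    "single_object": 0.95,
    "two_object": 0.85,
    "counting": 0.60,
    "colors": 0.90,
    "position": 0.70,
    "color_attribution": 0.60,
}
print(round(geneval_overall(example), 2))  # 0.77
```

Because every category carries equal weight, a model can post a strong overall number while still being weak at, say, counting or spatial positioning, so the per-category breakdown is worth checking alongside the headline score.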

Is Janus-Pro Better Than Dall-E 3 or Stable Diffusion?

According to the benchmark results DeepSeek reports, both DALL-E 3 and Stable Diffusion score lower than Janus-Pro-7B on GenEval and DPG-Bench.

Still, I take these numbers with a grain of salt, given how the sample images look. The best way to check is to run my own tests. Let’s look at some examples below:

Prompt: A photo of a herd of red sheep on a green field.
