Qwen 3.5 Gets an Open-Source Release (Qwen3.5-397B-A17B)

The natively multimodal open source AI model boasts impressive benchmark scores.

The Alibaba Cloud team has officially released Qwen3.5-397B-A17B, the first open-weight model in their new Qwen 3.5 series. This is a big deal because it introduces a native vision-language architecture that processes text and images as a single, unified stream rather than as separate components.

What makes this model unique is its efficiency: despite containing a massive 397 billion parameters, it uses a hybrid structure to activate only 17 billion per step. According to the reported benchmarks, this lets it deliver performance comparable to proprietary leaders like GPT-5.2 and Claude 4.5 Opus, finally placing “future-class” intelligence directly into the hands of open-source developers.

Let’s tear down the technicals to see if the hype matches the weights.

What’s new with Qwen3.5-397B-A17B

Qwen 3.5 distinguishes itself through a “hybrid” architecture that prioritizes efficiency without sacrificing raw intelligence.

The Hybrid Architecture (MoE + Linear Attention): The standout feature here is the efficiency ratio. The model boasts 397 billion total parameters, but thanks to a sparse Mixture-of-Experts (MoE) design, only 17 billion parameters are activated per forward pass.

You get the knowledge width of a massive model with the inference latency of a much smaller one.
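To make the ratio concrete, here is a minimal sketch of top-k expert routing, the general technique behind sparse MoE layers. Only the 397B and 17B figures come from the release; the four toy experts, the router scores, and `k=2` are hypothetical values for illustration, and Qwen 3.5's actual router may differ.

```python
import math

TOTAL_PARAMS_B = 397   # total parameters in billions (figure from the article)
ACTIVE_PARAMS_B = 17   # parameters active per forward pass (figure from the article)


def active_fraction(total_b, active_b):
    """Share of the weights that actually run for a given token."""
    return active_b / total_b


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


if __name__ == "__main__":
    print(f"active share: {active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B):.1%}")
    # Four toy "experts" (hypothetical; real experts are feed-forward blocks).
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    print(moe_forward(1.0, experts, router_logits=[0.1, 2.0, -1.0, 1.5], k=2))
```

With the article's numbers, about 4.3% of the weights do work on any given token. The other experts still have to sit in memory, which matters when we get to hardware.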

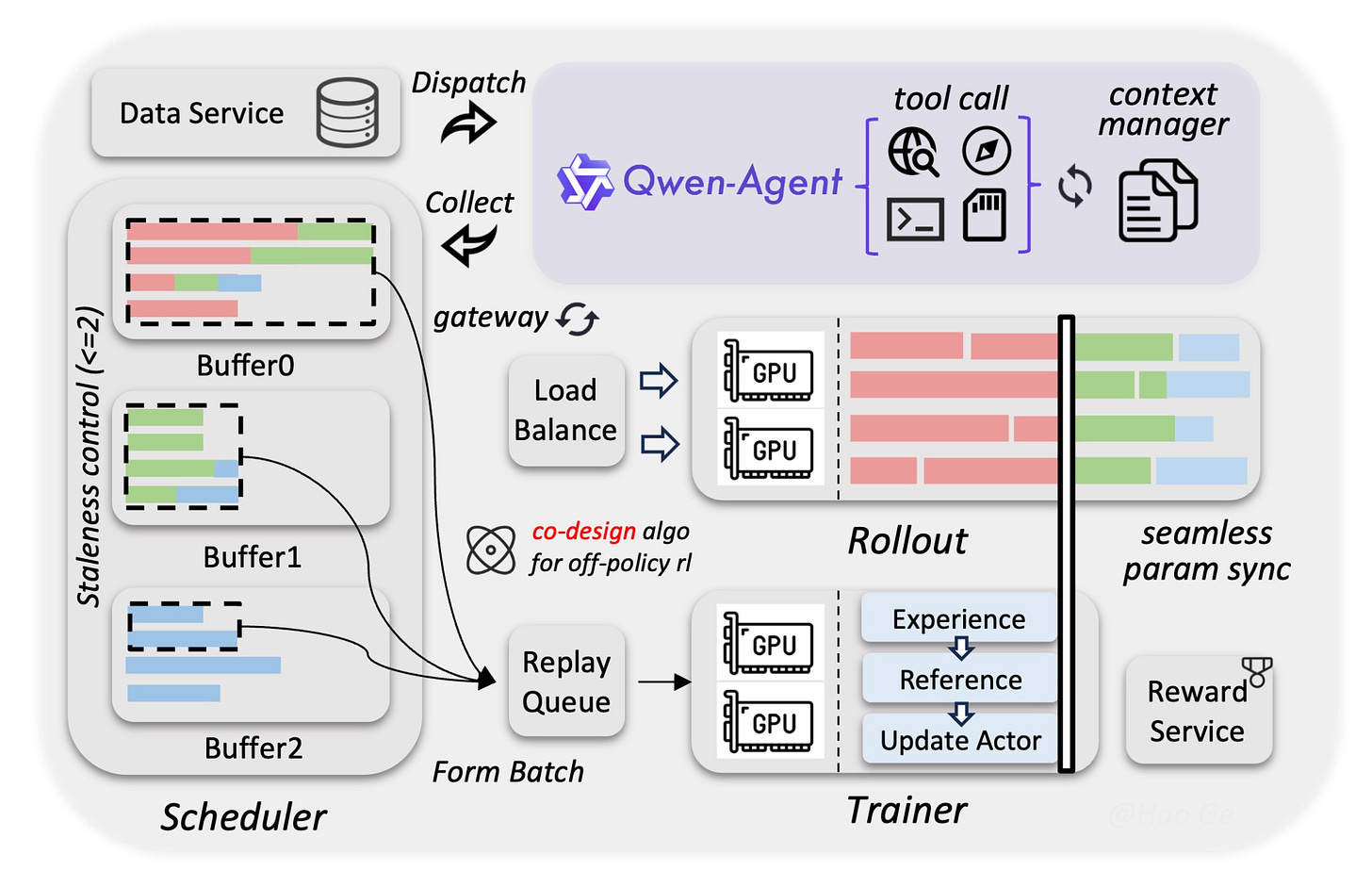

The model fuses Gated Delta Networks with the MoE architecture. This moves beyond the standard Transformer: linear attention layers keep a fixed-size state instead of a cache that grows with every token, which is what enables high-throughput inference. The team claims decoding throughput is 8.6x to 19.0x higher than previous Qwen3-Max iterations.
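For intuition on why this kind of layer decodes quickly, here is a rough sketch of one gated delta rule step in plain Python. It follows the recurrence as I understand it from the Gated Delta Networks literature, S_t = alpha * S_{t-1} (I - beta * k k^T) + beta * v k^T. Whether Qwen 3.5 uses exactly this form is an assumption, and the function names and tiny dimensions are made up for the demo.

```python
def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def gated_delta_step(state, q, k, v, alpha, beta):
    """One decoding step of a gated delta rule memory.

    state: d_v x d_k matrix, the only thing carried between tokens.
    alpha: decay gate in [0, 1]; beta: write strength in [0, 1].
    """
    recalled = matvec(state, k)  # what the memory currently stores for key k
    new_state = [
        [alpha * (state[i][j] - beta * recalled[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(v))
    ]
    return new_state, matvec(new_state, q)


if __name__ == "__main__":
    state = [[0.0, 0.0], [0.0, 0.0]]
    key = [1.0, 0.0]
    # Write one value, then overwrite it under the same key.
    state, out = gated_delta_step(state, key, key, [3.0, 4.0], alpha=1.0, beta=1.0)
    print(out)
    state, out = gated_delta_step(state, key, key, [5.0, 6.0], alpha=1.0, beta=1.0)
    print(out)
```

The state stays the same size no matter how many tokens have been processed, so each new token costs the same amount of work. Standard attention has to look back over an ever-growing cache.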

Native Multimodality: Unlike models that stitch a vision encoder onto a text decoder (and often feel disjointed), Qwen 3.5 is a “native vision-language model”. It uses early fusion training on trillions of multimodal tokens.

Capability: It supports a 1M-token context window (in the Qwen3.5-Plus version) and can process video, images, and text in a single model.

Infrastructure: The training pipeline achieved near 100% multimodal training efficiency compared to text-only baselines, a notoriously difficult engineering feat.

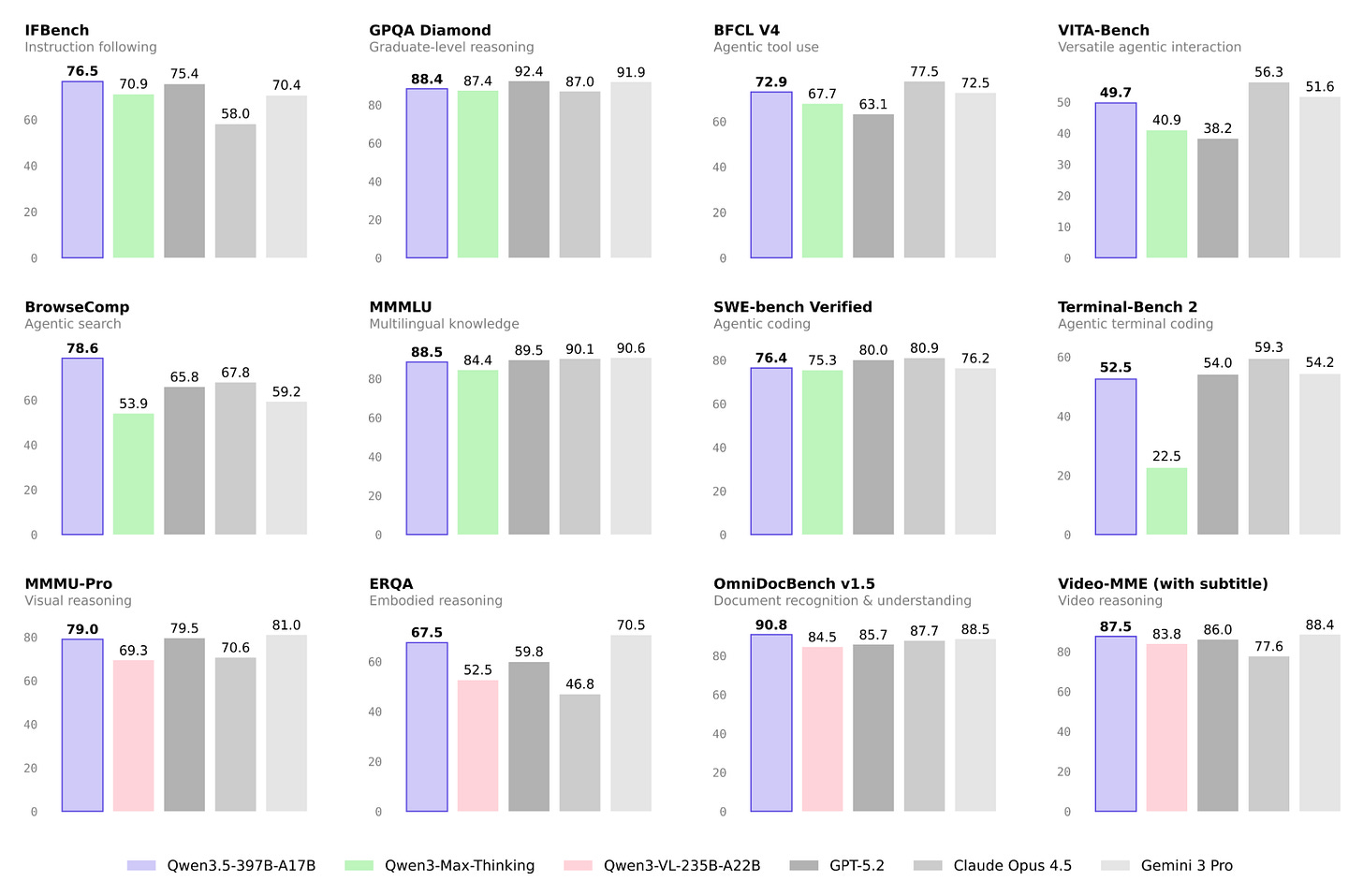

Performance vs. competitors: The reported benchmarks are aggressive. Qwen 3.5 reportedly matches or outperforms GPT-5.2 and Claude 4.5 Opus on critical metrics like MMLU-Redux (94.9), and the team reports comparable results on math and STEM benchmarks.

Coding: On LiveCodeBench v6, it scores 83.6, putting it in the same weight class as the proprietary leaders.

Multilingual: It supports 201 languages and dialects, a massive jump from previous versions, making it truly global.

How to get started with Qwen3.5-397B-A17B

The weights are live on the Hugging Face Hub. Look for Qwen/Qwen3.5-397B-A17B.
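If you just want to poke at the repository before committing disk space, a sketch like the one below pulls only the small JSON files using `snapshot_download` from the `huggingface_hub` package. The repository name is the one given above. The `local_dir` value is a placeholder, and the assumption that the repo ships its config as `*.json` files follows the usual Hub convention rather than anything I have verified for this model.

```python
REPO_ID = "Qwen/Qwen3.5-397B-A17B"  # repository name from the article


def fetch_config_files(local_dir="qwen3.5-config"):
    """Download only the small JSON files (config, tokenizer metadata)."""
    # Imported lazily so this file loads even without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=["*.json"],  # skip the very large weight shards
        local_dir=local_dir,        # hypothetical target folder
    )


if __name__ == "__main__":
    print(fetch_config_files())
```

Dropping `allow_patterns` would fetch everything, weights included, so check your free disk space first.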

Hardware Requirements:

Warning: While only 17B parameters are active (making inference fast), the model sits on 397B parameters. You need massive VRAM to load the full weights, even if the compute cost is low.
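A quick back-of-the-envelope calculation shows why. The sketch below estimates memory for the weights alone at a few common precisions. It ignores the KV cache, activations, and runtime overhead, so treat the results as lower bounds. The 80 GB per-GPU figure is my assumption (a common data-center card size), not something from the release.

```python
import math

TOTAL_PARAMS = 397e9  # total parameter count (figure from the article)

# Bytes per parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params, bytes_per_param):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus(weights_gb, gpu_memory_gb=80.0):
    """Lower bound on GPU count; real deployments need extra headroom."""
    return math.ceil(weights_gb / gpu_memory_gb)


if __name__ == "__main__":
    for name, width in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(TOTAL_PARAMS, width)
        print(f"{name}: {gb:.1f} GB of weights, at least {min_gpus(gb)} x 80 GB GPUs")
```

At 16-bit precision that works out to roughly 794 GB of weights, which is why quantized builds are the realistic path for anything short of a multi-GPU server.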

Local Use: For consumer hardware (Apple Silicon, etc.), look for MLX or GGUF (llama.cpp) quantized versions which are supported out of the box.

Alibaba Cloud: If you lack the H100 cluster, you can access Qwen3.5-Plus via the Alibaba Cloud Model Studio API.

Chat: https://chat.qwen.ai

Hi there! Thanks for making it to the end of this post! My name is Jim, and I’m an AI enthusiast passionate about exploring the latest news, guides, and insights in the world of generative AI. If you’ve enjoyed this content and would like to support my work, consider becoming a paid subscriber. Your support means a lot!