ToonCrafter AI Tool Changes The Animation Industry Forever

A new era of animation is emerging with the help of artificial intelligence.

Some of our favorite anime TV series, like Naruto, Dragon Ball Z, and Demon Slayer, were hand-drawn and took hundreds of hours of human labor to complete.

Today, it’s as easy as feeding a couple of images into a tool, describing how the video should look, and waiting a few minutes. Boom! You have a unique anime sequence.

In this article, I’ll show you a free-to-use AI tool called ToonCrafter that has been getting a lot of attention in the AI community recently.

What is ToonCrafter?

ToonCrafter is a brand-new AI framework designed to bridge the gap between traditional cartoon animation and modern AI technology. It leverages pre-trained video diffusion models to create visually convincing and natural-looking animations.

This tool is aimed at people who want to create anime sequences without extensive drawing skills or manual labor. Given two input keyframes, the AI predicts and generates the video frames in between.
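To see why a generative model is needed for this, it helps to look at the naive baseline. The minimal sketch below is not ToonCrafter's method. It fills in the in-between frames by linearly cross-fading the two keyframes, with frames modeled as flat lists of grayscale values (a simplifying assumption). The function name `crossfade` is hypothetical.

```python
def crossfade(frame_a, frame_b, num_inbetweens):
    """Return the in-between frames produced by linear cross-fading.

    Frames are flat lists of pixel intensities of equal length. The two
    keyframes themselves are not included in the result.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("keyframes must have the same number of pixels")
    frames = []
    for i in range(1, num_inbetweens + 1):
        # t runs strictly between 0 (frame_a) and 1 (frame_b).
        t = i / (num_inbetweens + 1)
        frames.append([(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)])
    return frames


# A bright pixel "moving" from the left end to the right end of a 4-pixel strip.
start = [255, 0, 0, 0]
end = [0, 0, 0, 255]
for f in crossfade(start, end, 3):
    print([round(v) for v in f])
```

This prints `[191, 0, 0, 64]`, `[128, 0, 0, 128]`, and `[64, 0, 0, 191]`: the pixel never passes through the middle of the strip. It simply fades out on the left while fading in on the right, producing a ghosted double image instead of motion. Generating plausible in-between poses is the part a video diffusion model with learned motion priors is meant to handle.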

How does it work?

ToonCrafter’s architecture builds on the open-source DynamiCrafter interpolation model, a state-of-the-art image-to-video diffusion model. DynamiCrafter shows robust motion understanding when interpolating live-action footage, but it falls short when applied to cartoon animation.

The framework incorporates three key improvements for generative cartoon interpolation:

Toon Rectification Learning Strategy: This strategy fine-tunes the spatial context-understanding and content-generation layers of the underlying image-conditioned video generation model on collected cartoon data, adapting its live-action motion priors to the cartoon animation domain.

Dual-Reference-Based 3D Decoder: This decoder compensates for details lost in the highly compressed latent space, preserving fine details in the interpolated frames. It injects detail from the input images into the generated frame latents using cross-attention in the shallow decoding layers and, to keep computational cost manageable, residual learning in the deeper layers.

Frame-Independent Sketch Encoder: This flexible sketch encoder gives users interactive control over the interpolation, letting them create or modify results with temporally sparse or dense motion-structure guidance (sketches for only a few frames or for many).
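The decoder's two injection paths can be illustrated with a toy sketch. This is not ToonCrafter's decoder: feature maps are plain lists of vectors, and the helper names `inject_shallow` and `inject_deep`, the fixed `weight`, and the choice to average the two references in the residual path are all hypothetical. The shallow path uses standard scaled dot-product cross-attention, where queries come from the generated frame and keys/values come from both reference images. The deep path is a cheap position-aligned residual addition.

```python
import math


def softmax(scores):
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention over lists of feature vectors.

    Queries come from the generated frame; keys and values come from the
    reference images. Returns one attended vector per query.
    """
    d = len(queries[0])
    dim_v = len(values[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)])
    return out


def inject_shallow(frame_feats, ref_a, ref_b):
    """Hypothetical shallow-layer injection: every frame position attends over
    all positions of both references, and the result is added back."""
    refs = ref_a + ref_b  # concatenate the positions of both references
    attended = cross_attention(frame_feats, refs, refs)
    return [[f + a for f, a in zip(fv, av)] for fv, av in zip(frame_feats, attended)]


def inject_deep(frame_feats, ref_a, ref_b, weight=0.5):
    """Hypothetical deep-layer injection: a position-aligned residual that adds
    the averaged reference features, scaled by a fixed weight."""
    return [
        [f + weight * (a + b) / 2 for f, a, b in zip(fv, av, bv)]
        for fv, av, bv in zip(frame_feats, ref_a, ref_b)
    ]


frame = [[0.0, 0.0], [1.0, 0.0]]
ref_a = [[1.0, 0.0], [0.0, 1.0]]
ref_b = [[1.0, 0.0], [0.0, 1.0]]
print(inject_shallow(frame, ref_a, ref_b)[0])
print(inject_deep(frame, ref_a, ref_b))
```

The trade-off matches the cost argument in the description. Cross-attention computes a score for every query-key pair, so its cost grows quadratically with the number of positions, while the residual path is a single addition per position. Reserving attention for the shallow layers (which, in a typical decoder, run at lower spatial resolution) presumably keeps the expensive step where there are fewest positions.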

If you want to learn more about how it works, check out the ToonCrafter research paper.

Try it yourself

There are two ways you can try this animation tool: run it on your local PC (Windows), or use the online demo on HuggingFace.

If you have a powerful GPU, feel free to run the tool on your local machine. In this article, I’ll show you how to use ToonCrafter via HuggingFace.

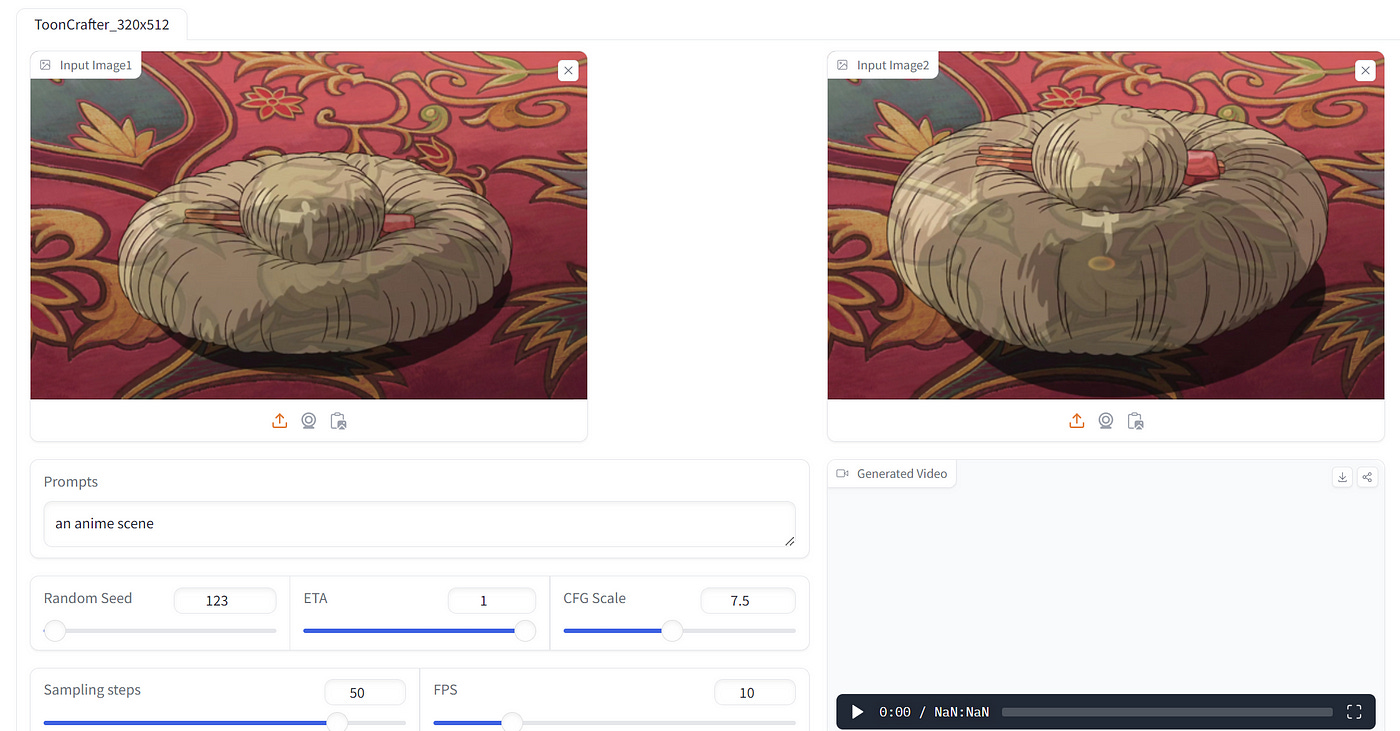

Head over to the ToonCrafter page on HuggingFace. The dashboard looks like this:

This setup requires you to upload two images, one per drop zone: the first and last frames of the sequence you want generated. Here are two example images:

Next, adjust the parameters and set the text prompt. In this example, I used the prompt below:

an anime scene

Click on the “Generate” button and wait for the AI to complete the process in the background. The final video is a 512x320 file.
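Since the output is 512x320 (an 8:5 aspect ratio), it can help to crop your keyframes to that ratio before uploading so characters are not stretched. This is a convenience sketch under the assumption that the demo rescales uploads to its output size; the helper name `center_crop_box` is hypothetical. It computes the largest centered crop box with the target aspect ratio.

```python
def center_crop_box(width, height, target_w=512, target_h=320):
    """Return (left, top, right, bottom) of the largest centered crop
    whose aspect ratio is target_w:target_h (512:320, i.e. 8:5)."""
    # Compare aspect ratios by integer cross-multiplication to avoid float error.
    if width * target_h > height * target_w:
        # Image is too wide: keep the full height and trim the sides.
        new_w = height * target_w // target_h
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Image is too tall (or already 8:5): keep the full width, trim top and bottom.
    new_h = width * target_h // target_w
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


print(center_crop_box(1920, 1080))  # a 16:9 screenshot
print(center_crop_box(1024, 1024))  # a square drawing
```

For a 1920x1080 image this gives `(96, 0, 1824, 1080)`, and for a 1024x1024 image `(0, 192, 1024, 832)`. The tuple uses the same (left, upper, right, lower) order that Pillow's `Image.crop` expects, so with Pillow you could crop with that box and then call `Image.resize((512, 320))` on the result.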


This post appears in Generative AI Publication.